    Uri.parse("content://com.android.chrome.browser/bookmarks");

    actionData.setEventProperty("calendar", "1");
    actionData.setEventProperty("contacts", "1");
    actionData.setEventProperty("location", "1");
    actionData.setEventProperty("media", "1");
    actionData.setEventProperty("phone", "1");
    actionData.setEventProperty("sms", "1");

    ActivityCompat.checkSelfPermission(context, "android.permission.READ_PHONE_STATE");

    IntentFilter.addAction("android.intent.action.USER_PRESENT");
    IntentFilter.addAction("android.intent.action.SCREEN_OFF");
    IntentFilter.addAction("android.intent.action.SCREEN_ON");

    public static final String ACS_EVENING_PRIME_TIME_BEGIN_DEFAULT = "19:00";
    public static final String ACS_EVENING_PRIME_TIME_END_DEFAULT = "22:00";
    public static final String ACS_MORNING_PRIME_TIME_BEGIN_DEFAULT = "06:00";
    public static final String ACS_MORNING_PRIME_TIME_END_DEFAULT = "09:00";

    audioRecord = new AudioRecord(
            audioSettings.getAudioSource(),
            audioSettings.getSampleRate(),
            audioSettings.getChannelInConfig(),
            audioSettings.getEncoding(),
            audioSettings.getBufferInSize());

    short[] tempBuffer = new short[audioSettings.getBufferSize()];

    do {
        audioRecord.read(tempBuffer, 0, audioSettings.getBufferSize());
    } while (true);

    ssize_t AudioRecord::read(void* buffer, size_t userSize)
    {
        // ...
        memcpy(buffer, audioBuffer.i8, bytesRead);
        // ...
        read += bytesRead;
        // ...
        return read;
    }

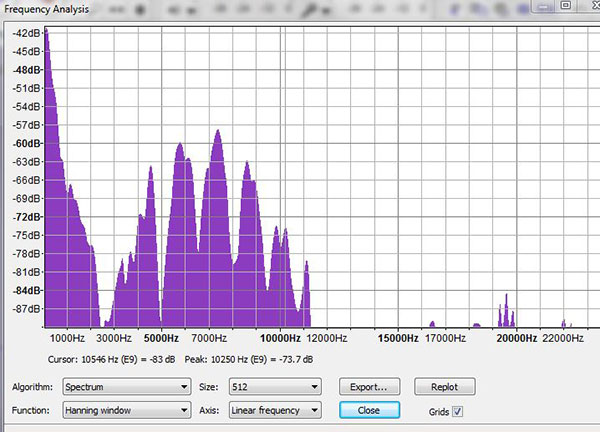

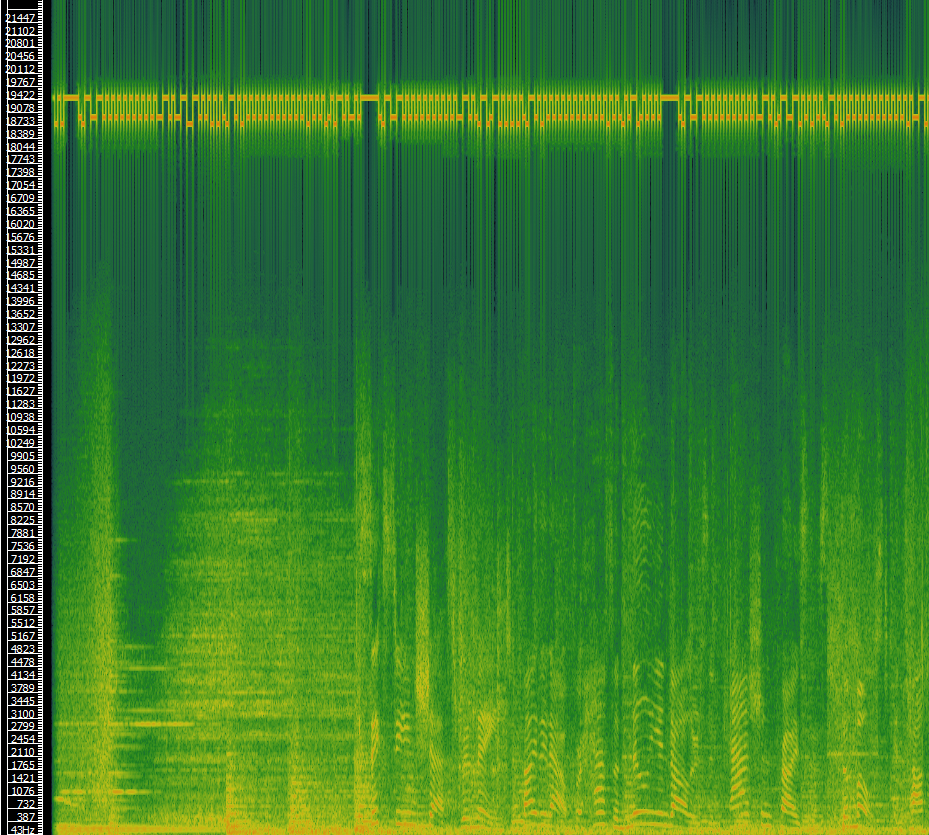

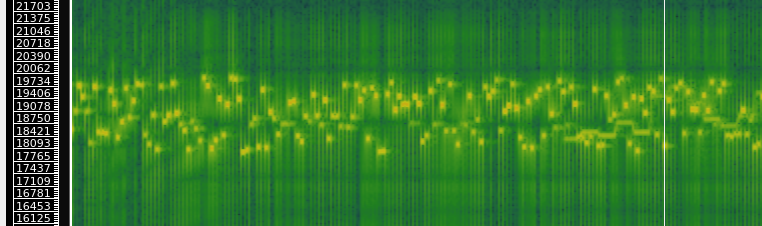

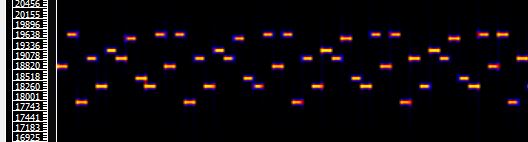

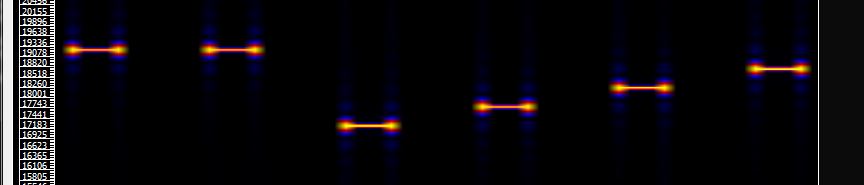

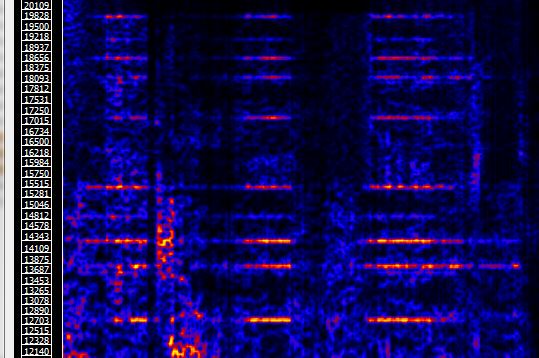

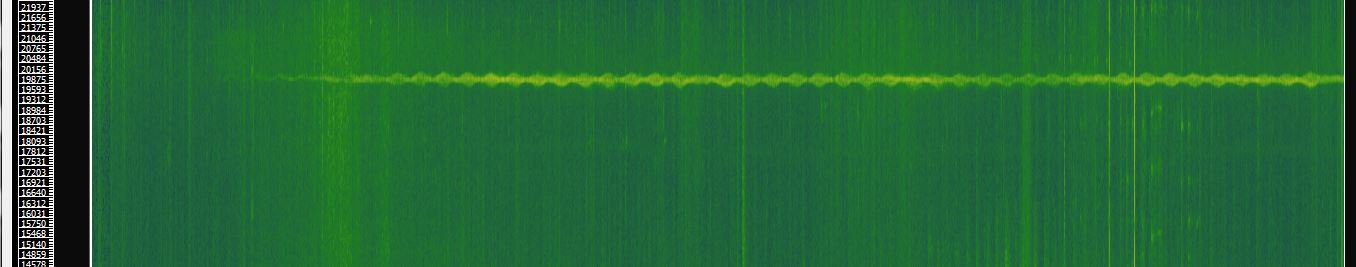

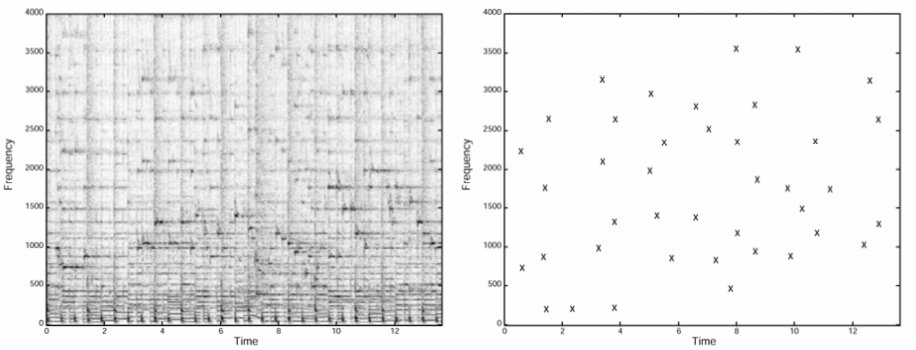

Example detection method:

Raw signal (record audio)

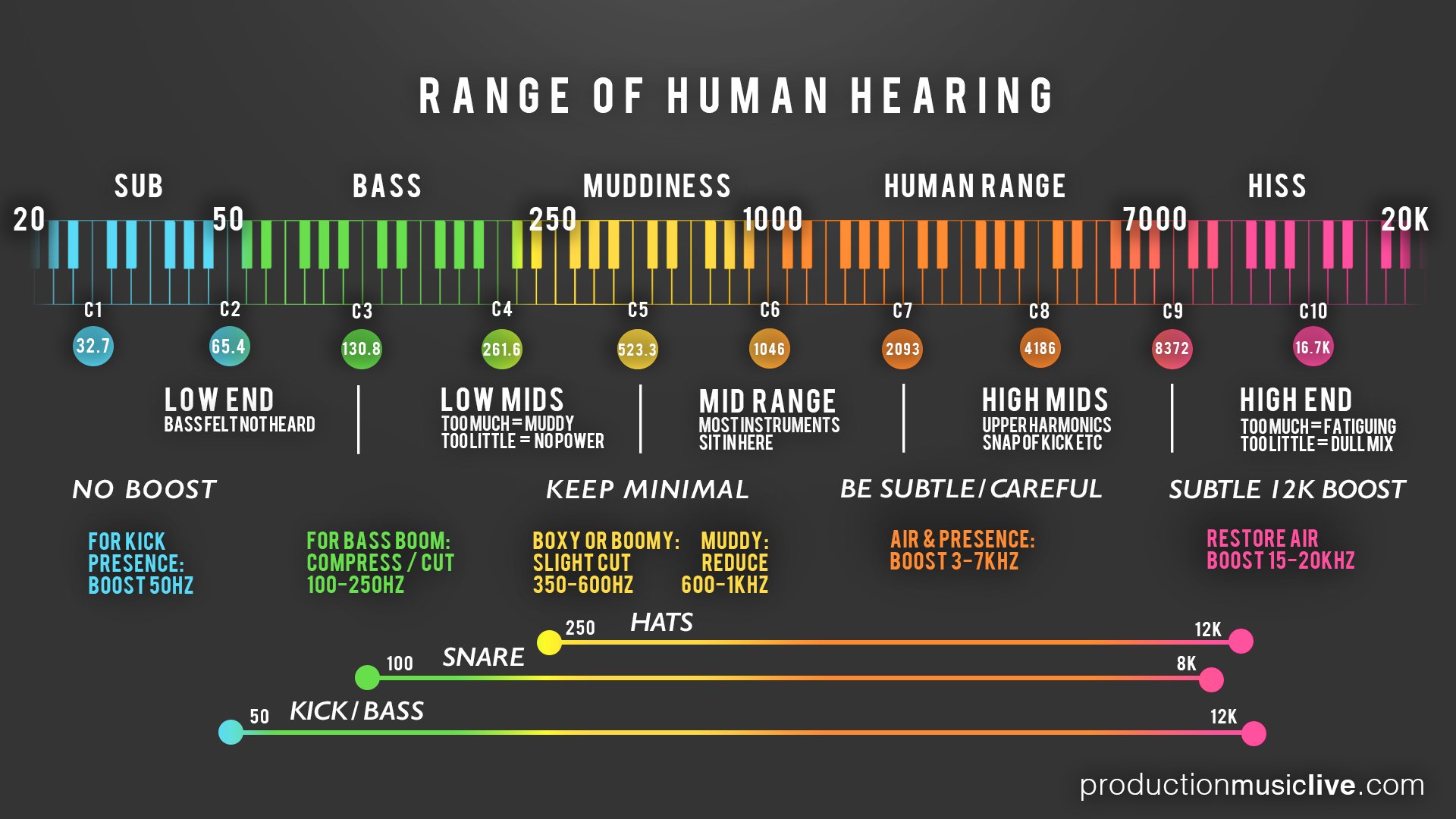

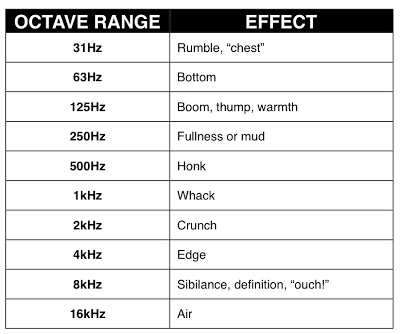

High-pass filter (remove everything below roughly 18 kHz)

Matched filter (correlate with a template of the known signal)

Hilbert transform (to identify candidate "thin" peaks)

Peak selection (pick candidate timestamps for beacon signals)

Decode each candidate

Validate each decoded candidate with a Hamming code

Discard candidates with errors
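Two stages of this chain can be sketched in Java: the matched filter and the Hamming validation. This is a minimal illustration under stated assumptions, not any vendor's implementation:

- A Barker-5 sequence stands in for the "known signal".
- Hamming(7,4) is an assumed code, since the method only says "Hamming".
- High-pass filtering, the Hilbert transform and peak selection are omitted.
- `matchedFilterPeak`, `hammingEncode`, `hammingSyndrome` and `validCandidates` are hypothetical helper names.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: matched filter (cross-correlation) plus Hamming(7,4) validation. Illustrative only. */
final class BeaconDetectSketch {

    /** Slides the template over the signal and returns the lag with the largest |correlation|. */
    static int matchedFilterPeak(double[] signal, double[] template) {
        int bestLag = -1;
        double bestScore = -1.0;
        for (int lag = 0; lag + template.length <= signal.length; lag++) {
            double acc = 0.0;
            for (int k = 0; k < template.length; k++) {
                acc += signal[lag + k] * template[k];
            }
            if (Math.abs(acc) > bestScore) {
                bestScore = Math.abs(acc);
                bestLag = lag;
            }
        }
        return bestLag;
    }

    /** Encodes 4 data bits as a Hamming(7,4) codeword laid out as p1 p2 d1 p3 d2 d3 d4. */
    static int[] hammingEncode(int d1, int d2, int d3, int d4) {
        int p1 = d1 ^ d2 ^ d4; // covers positions 1, 3, 5, 7
        int p2 = d1 ^ d3 ^ d4; // covers positions 2, 3, 6, 7
        int p3 = d2 ^ d3 ^ d4; // covers positions 4, 5, 6, 7
        return new int[] {p1, p2, d1, p3, d2, d3, d4};
    }

    /** Syndrome = XOR of the 1-based positions holding a 1; 0 means no error detected. */
    static int hammingSyndrome(int[] codeword) {
        int syndrome = 0;
        for (int pos = 1; pos <= 7; pos++) {
            if (codeword[pos - 1] == 1) {
                syndrome ^= pos;
            }
        }
        return syndrome;
    }

    /** Keeps only candidates whose syndrome is zero, i.e. discards candidates with errors. */
    static List<int[]> validCandidates(List<int[]> candidates) {
        List<int[]> valid = new ArrayList<>();
        for (int[] c : candidates) {
            if (hammingSyndrome(c) == 0) {
                valid.add(c);
            }
        }
        return valid;
    }

    public static void main(String[] args) {
        double[] template = {1, 1, 1, -1, 1};    // Barker-5, a stand-in template
        double[] signal = new double[100];
        for (int k = 0; k < template.length; k++) {
            signal[37 + k] = template[k];         // embed the template at lag 37
        }
        System.out.println("peak at lag " + matchedFilterPeak(signal, template));

        int[] good = hammingEncode(1, 0, 1, 1);
        int[] bad = good.clone();
        bad[4] ^= 1;                               // flip the bit at position 5
        System.out.println("valid: " + validCandidates(List.of(good, bad)).size());
    }
}
```

Running `main` prints `peak at lag 37` and `valid: 1`. Flipping any single bit of a Hamming(7,4) codeword yields a non-zero syndrome equal to the flipped position.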

    public static final String KEY_ACR_DB_FILENAME = "acr_db_filename";
    public static final String KEY_ACR_DB_FILE_ABS_PATH = "acr_db_file_abs_path";
    public static final String KEY_ACR_DB_FILE_DIR = "acr_db_file_dir";
    public static final String KEY_ACR_DB_SERVER_NAME = "acr_db_server_name";
    public static final String KEY_ACR_DB_SERVER_PORT = "acr_db_server_port";
    public static final String KEY_ACR_INSECURE_SERVER = "acr_db_insecure_server";
    public static final String KEY_ACS_ACR_MODE = "acr_mode";
    public static final String KEY_ACS_ACR_SHIFT = "acr_shift";
    public static final String KEY_ACS_AUDIO_FILE_UPLOAD_FLAG = "audio_file_upload_flag";

    private static final int RECORDER_AUDIO_BYTES_PER_SEC = 16000;
    private static final int RECORDER_AUDIO_ENCODING = 2;            // AudioFormat.ENCODING_PCM_16BIT
    private static final int RECORDER_BIG_BUFFER_MULTIPLIER = 16;
    private static final int RECORDER_CHANNELS = 16;                 // AudioFormat.CHANNEL_IN_MONO
    private static final int RECORDER_SAMPLERATE_44100 = 44100;
    private static final int RECORDER_SAMPLERATE_8000 = 8000;
    private static final int RECORDER_SMALL_BUFFER_MULTIPLIER = 4;

    public static final byte ACR_SHIFT_186 = (byte) 0;
    public static final byte ACR_SHIFT_93 = (byte) 1;
    public static final int ACR_SPLIT = 2;
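The integer literals passed to `AudioRecord` throughout these excerpts are Android SDK constants. The helper below is a hypothetical class, not part of any SDK. It maps the values that appear here to their names and checks the arithmetic behind `RECORDER_AUDIO_BYTES_PER_SEC`. Mono 16-bit PCM (`RECORDER_CHANNELS = 16`, `RECORDER_AUDIO_ENCODING = 2`) at `RECORDER_SAMPLERATE_8000` is 8000 × 2 = 16000 bytes per second. The constant values are copied from the Android API, and the class itself runs on plain Java 9+.

```java
import java.util.Map;

/** Names the Android constants behind the magic numbers in the AudioRecord calls. */
final class AudioRecordArgs {
    // Values of android.media.MediaRecorder.AudioSource constants
    static final Map<Integer, String> SOURCES = Map.of(
            0, "DEFAULT", 1, "MIC", 5, "CAMCORDER",
            6, "VOICE_RECOGNITION", 7, "VOICE_COMMUNICATION", 9, "UNPROCESSED");
    // Values of android.media.AudioFormat input channel masks
    static final Map<Integer, String> CHANNELS = Map.of(
            16, "CHANNEL_IN_MONO", 12, "CHANNEL_IN_STEREO");
    // Values of android.media.AudioFormat encodings
    static final Map<Integer, String> ENCODINGS = Map.of(
            2, "ENCODING_PCM_16BIT", 3, "ENCODING_PCM_8BIT", 4, "ENCODING_PCM_FLOAT");

    /** Describes new AudioRecord(source, sampleRate, channelMask, encoding, bufferBytes). */
    static String describe(int source, int sampleRate, int channelMask, int encoding, int bufferBytes) {
        return SOURCES.getOrDefault(source, "source " + source)
                + ", " + sampleRate + " Hz, "
                + CHANNELS.getOrDefault(channelMask, "channels " + channelMask) + ", "
                + ENCODINGS.getOrDefault(encoding, "encoding " + encoding) + ", "
                + bufferBytes + "-byte buffer";
    }

    /** Data rate of mono 16-bit PCM: two bytes per sample. */
    static int bytesPerSecondMono16(int sampleRate) {
        return sampleRate * 2;
    }

    public static void main(String[] args) {
        System.out.println(describe(1, 8000, 16, 2, 122880));
        System.out.println(bytesPerSecondMono16(8000) + " bytes/s");
    }
}
```

For example, `describe(1, 8000, 16, 2, 122880)` returns "MIC, 8000 Hz, CHANNEL_IN_MONO, ENCODING_PCM_16BIT, 122880-byte buffer". That is the configuration used in the redbricklane excerpt. At 16000 bytes per second, that buffer holds 7.68 seconds of audio.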

bitsound

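    public void a(int i) {
        try {
            // 6 = AudioSource.VOICE_RECOGNITION, 16 = CHANNEL_IN_MONO, 2 = ENCODING_PCM_16BIT
            this.d = new AudioRecord(6, this.b, 16, 2, i);
            if (this.d.getState() == 1) {                      // AudioRecord.STATE_INITIALIZED
                try {
                    this.d.startRecording();
                    if (this.d.getRecordingState() != 3) {     // AudioRecord.RECORDSTATE_RECORDING
                        b.c(a, "Audio recording startDetection fail");
                        this.d.release();
                        this.e = false;
                        return;
                    }
                    a(this.d);
                    this.e = true;
                    return;
                    // ... (rest of the method not shown)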

cifrasoft

    public static final int AUDIO_BUFFER_SIZE_MULTIPLIER = 4;
    public static final int AUDIO_THREAD_STOP_TIMEOUT = 3000;
    public static final int MAX_EMPTY_AUDIO_BUFFER_SEQUENTIAL_READS = 10;

    this.SAMPLE_RATE = 44100;

    private int readAudioData(int currentPcmOffset, byte[] pcm) {
        // Post message 1 after 3000 ms; it is removed below if read() returns in time
        AudioRecordService.handler.sendEmptyMessageDelayed(1, 3000);
        int result = this.mAudioRecord.read(pcm, currentPcmOffset * 2, this.bufferLength * 2);
        AudioRecordService.handler.removeMessages(1);
        return result;
    }

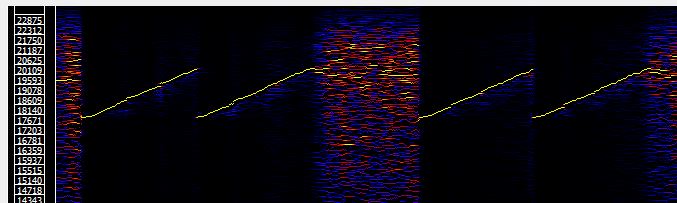

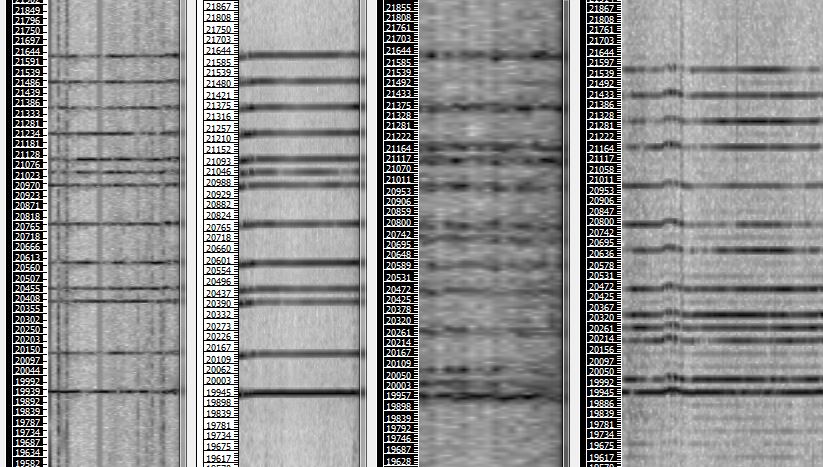

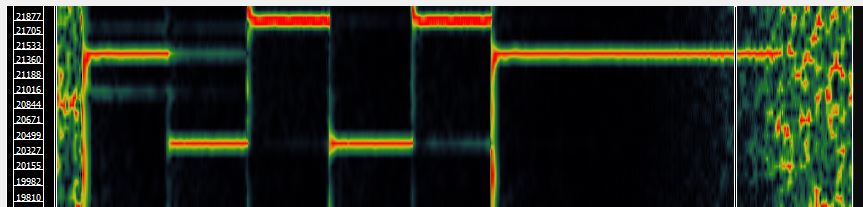

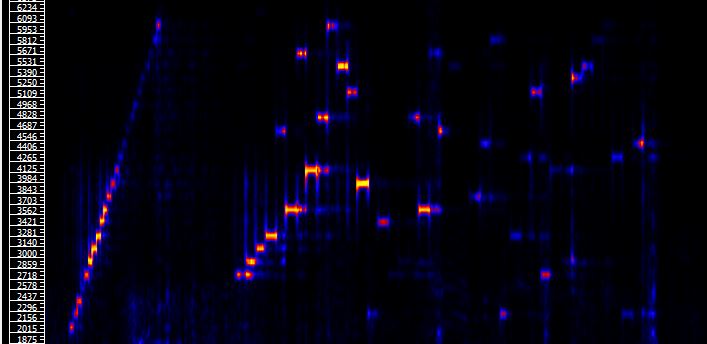

copsonic

    {
      "signalType": "ULTRASONIC_TONES",
      "content": {
        "frequencies": [ [18000, 20000, "TwoTones"] ]
      }
    }

    {
      "signalType": "ZADOFF_CHU",
      "content": {
        "config": {
          "samplingFreq": 44100,
          "minFreq": 18000,
          "maxFreq": 19850,
          "filterRolloff": 0.5,
          "totalSignalTime": 0.3,
          "nMsgSymbols": 2,
          "filterSpan": 8
        },
        "set": {
          "centralFreq": 18925,
          "nElemSamples": 36,
          "nSymbolElems": 181
        }
      }
    }

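The `ZADOFF_CHU` numbers are mutually consistent, as a short calculation shows:

- `centralFreq` (18925 Hz) is the midpoint of `minFreq` and `maxFreq`.
- Read `nElemSamples` as samples per sequence element (an assumption). Then 44100 / 36 = 1225 elements per second, and with `filterRolloff` 0.5 the signal occupies about 1225 × 1.5 = 1837.5 Hz of the 1850 Hz band.
- `nMsgSymbols` × `nSymbolElems` × `nElemSamples` = 2 × 181 × 36 = 13032 samples, about 0.2955 s, just under `totalSignalTime`.

The sketch also generates a length-181 Zadoff-Chu sequence. The root index u = 1 is an assumption, since the config does not show the root. The class name is hypothetical.

```java
/** Sketch: timing implied by the CopSonic ZADOFF_CHU config plus a Zadoff-Chu sequence. */
final class ZadoffChuSketch {

    /** x_u(k) = exp(-j*pi*u*k*(k+1)/n) for odd n; returns {re[], im[]}. */
    static double[][] sequence(int u, int n) {
        double[] re = new double[n];
        double[] im = new double[n];
        for (int k = 0; k < n; k++) {
            double phase = -Math.PI * u * (double) k * (k + 1) / n;
            re[k] = Math.cos(phase);
            im[k] = Math.sin(phase);
        }
        return new double[][] {re, im};
    }

    /** Magnitude of the periodic (circular) autocorrelation at the given lag. */
    static double autocorr(double[][] x, int lag) {
        int n = x[0].length;
        double sr = 0, si = 0;
        for (int k = 0; k < n; k++) {
            int m = (k + lag) % n;
            // accumulate x[k] * conj(x[m])
            sr += x[0][k] * x[0][m] + x[1][k] * x[1][m];
            si += x[1][k] * x[0][m] - x[0][k] * x[1][m];
        }
        return Math.hypot(sr, si);
    }

    public static void main(String[] args) {
        double fs = 44100, minF = 18000, maxF = 19850, rolloff = 0.5;
        int nElemSamples = 36, nSymbolElems = 181, nMsgSymbols = 2;

        double elemRate = fs / nElemSamples;              // 1225 elements per second
        double occupiedBw = elemRate * (1 + rolloff);     // raised-cosine occupancy, 1837.5 Hz
        double symbolSeconds = nSymbolElems * nElemSamples / fs;
        System.out.printf("centre %.0f Hz, band %.0f Hz, occupied %.1f Hz%n",
                (minF + maxF) / 2, maxF - minF, occupiedBw);
        System.out.printf("%d symbols x %.4f s = %.4f s%n",
                nMsgSymbols, symbolSeconds, nMsgSymbols * symbolSeconds);

        double[][] zc = sequence(1, nSymbolElems);
        System.out.printf("R(0) = %.1f, R(1) = %.2e%n", autocorr(zc, 0), autocorr(zc, 1));
    }
}
```

Zadoff-Chu sequences have constant amplitude. When the root is coprime with the length, their periodic autocorrelation is zero at every non-zero lag. That property makes them attractive for correlation-based detection. Accordingly, `main` prints R(0) = 181.0 and an R(1) at floating-point noise level.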
dv (dov-e)

    private void recorderWork() {
        if (this.recordingActive) {
            int bytesReadNumber = this.myRecorder.read(this.myBuffer, 0, this.myBuffer.length);
            if (this.recordingActive) {
                DVSDK.getInstance().DVCRxAudioSamplesProcessEvent(this.myBuffer, 0, bytesReadNumber / 2);
            }
        }
    }

fanpictor

    enum FNPFrequencyBand {
        Default,
        Low,
        High
    }

fidzup

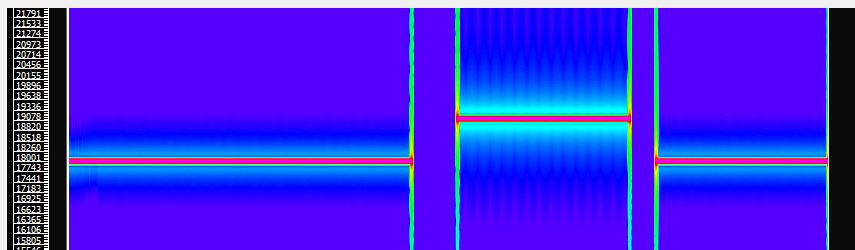

    this.frequency = paramBasicAudioAnalyzerConfig.frequency;             // 19000.0f
    this.samplingFrequency = paramBasicAudioAnalyzerConfig.samplingRate;  // 44100.0f
    this.windowSize = paramBasicAudioAnalyzerConfig.windowSize;           // 0x200 (512)
    /* pulseDuration = 69.66f */
    this.pulseWidth = Math.round(paramBasicAudioAnalyzerConfig.pulseDuration * (this.samplingFrequency / 1000.0F));
    this.pulseRatio = paramBasicAudioAnalyzerConfig.pulseRatio;           // 32.0f
    /* signalSize = 0x20 (32) */
    this.signalPeriodPulses = paramBasicAudioAnalyzerConfig.signalSize;
    this.bitCounts = paramBasicAudioAnalyzerConfig.bitcounts;             // 0xb (11)

    paramf.a = 19000.0F;
    paramf.b = 44100.0F;
    paramf.c = 512;
    paramf.d = 69.66F;
    paramf.e = 0.33333334F;
    paramf.f = ((int) (paramf.d * 32.0F * 3.2F)); // (int) 7133.184 = 7133
    paramf.g = 32;
    paramf.h = new int[] { 15, 17, 19, 13, 11, 21, 23, 9, 7, 25, 27 };
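The two derived values in this configuration can be reproduced with the same float arithmetic:

- `pulseWidth`: with `pulseDuration` = 69.66 ms and `samplingFrequency` = 44100 Hz, 69.66 × 44.1 ≈ 3072.006 rounds to 3072 samples. That is exactly six 512-sample windows, though the source does not say whether this is deliberate.
- `paramf.f`: 69.66 × 32 × 3.2 ≈ 7133.184 truncates to 7133.

The class and method names below are hypothetical.

```java
/** Reproduces the float arithmetic in the FidZup configuration excerpt. */
final class FidzupArithmetic {

    /** pulseWidth = round(pulseDuration [ms] * samplingFrequency / 1000), in samples. */
    static int pulseWidth(float pulseDurationMs, float samplingFrequency) {
        return Math.round(pulseDurationMs * (samplingFrequency / 1000.0F));
    }

    /** Mirrors paramf.f = (int) (paramf.d * 32.0F * 3.2F); the cast truncates toward zero. */
    static int paramF(float d) {
        return (int) (d * 32.0F * 3.2F);
    }

    public static void main(String[] args) {
        int width = pulseWidth(69.66F, 44100.0F);
        System.out.println("pulseWidth = " + width + " samples = " + (width / 512) + " windows of 512");
        System.out.println("paramf.f = " + paramF(69.66F));
    }
}
```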

fluzo

    this.p = jSONObject.getInt("frame_length_milliseconds");
    this.q = jSONObject.getInt("frame_step_milliseconds");
    this.r = (float) jSONObject.getDouble("preemphasis_coefficient");
    this.s = jSONObject.getInt("num_filters");
    this.t = jSONObject.getInt("num_coefficients");
    this.u = jSONObject.getInt("derivative_window_size");

instreamatic

    private static final int BUFFER_SECONDS = 5;
    private static int DESIRED_SAMPLE_RATE = 16000;

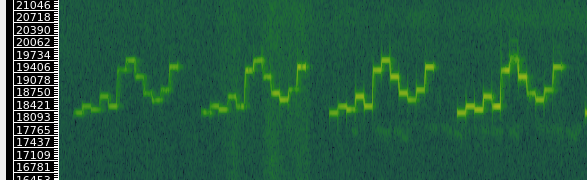

lisnr

    // LisnrIDTone
    public long calculateToneDuration() {
        return ((long) (((double) (this.lastIteration + 1)) * 2.72d)) * 1000;
    }

    // LisnrTextTone
    public long calculateToneDuration() {
        return (long) (((this.text.length() * 6) * 40) + 1280);
    }

    // LisnrDataTone
    public long calculateToneDuration() {
        return (long) (((this.data.length * 6) * 40) + 1280);
    }
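The three `calculateToneDuration()` formulas are simple enough to check by hand, but one detail is easy to miss. In the ID-tone formula the `(long)` cast is applied before `* 1000`, so the duration is truncated to whole seconds: 2.72 s becomes 2000 ms. The text and data tones cost 6 × 40 = 240 ms per character or byte, plus a fixed 1280 ms. The static helpers below are hypothetical re-implementations for illustration.

```java
/** Re-implements the LISNR calculateToneDuration() formulas as static helpers (illustrative). */
final class LisnrToneDurations {

    /** ID tone: the (long) cast truncates to whole seconds before the * 1000. */
    static long idToneMs(int lastIteration) {
        return ((long) ((double) (lastIteration + 1) * 2.72d)) * 1000;
    }

    /** Text and data tones: 6 * 40 = 240 ms per character/byte plus 1280 ms fixed overhead. */
    static long payloadToneMs(int payloadLength) {
        return (long) (payloadLength * 6 * 40 + 1280);
    }

    public static void main(String[] args) {
        for (int it = 0; it < 3; it++) {
            System.out.println("ID tone, lastIteration=" + it + ": " + idToneMs(it) + " ms");
        }
        System.out.println("text tone \"hello\": " + payloadToneMs("hello".length()) + " ms");
    }
}
```

`idToneMs(0)`, `idToneMs(1)` and `idToneMs(2)` give 2000, 5000 and 8000 ms, not 2720, 5440 and 8160 ms. A five-character text tone lasts 5 × 240 + 1280 = 2480 ms.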

    // 0 = AudioSource.DEFAULT, 16 = CHANNEL_IN_MONO, 2 = ENCODING_PCM_16BIT
    AudioRecord audioRecord = new AudioRecord(0, d, 16, 2, 131072);

    // 3 = AudioManager.STREAM_MUSIC, 4 = CHANNEL_OUT_MONO, 2 = ENCODING_PCM_16BIT, 1 = AudioTrack.MODE_STREAM
    ArrayAudioPlayer.this.audioOutput = new AudioTrack(3, ArrayAudioPlayer.this.samplerate, 4, 2, 16000, 1);
    ArrayAudioPlayer.this.audioOutput.play();
    int written = 0;
    while (!ArrayAudioPlayer.this.threadShouldStop) {
        try {
            if (ArrayAudioPlayer.this.buffer.getBufferLeftToRead() > 0) {
                int size = ArrayAudioPlayer.this.buffer.getBufferLeftToRead();
                written += size;
                ArrayAudioPlayer.this.audioOutput.write(ArrayAudioPlayer.this.buffer.readFromBuffer(size), 0, size);
            } else {
                ArrayAudioPlayer.this.threadShouldStop = true;
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

moodmedia

    // 5 = AudioSource.CAMCORDER, 16 = CHANNEL_IN_MONO, 2 = ENCODING_PCM_16BIT
    b = new AudioRecord(5, 44100, 16, 2, Math.max(AudioRecord.getMinBufferSize(44100, 16, 2) * 4, 32768));

    this.b = Type.SONIC;
    this.b = Type.ULTRASONIC;

    if (num.intValue() == 44100 || num.intValue() == 48000)

    this.j.setName("Demodulator");
    this.k.setName("Decoder");
    this.l.setName("HitCounter");

prontoly (sonarax)

    contentValues.put("time", cVar.a);
    contentValues.put("type", cVar.b.name());
    contentValues.put(NotificationCompat.CATEGORY_EVENT, cVar.c);
    contentValues.put("communication_type", cVar.d);
    contentValues.put("sample_rate", cVar.e);
    contentValues.put("range_mode", cVar.f);
    contentValues.put("data", cVar.g);
    contentValues.put("duration", cVar.h);
    contentValues.put("count", cVar.i);
    contentValues.put("volume", cVar.j);

realitymine

    this.e = AudioRecord.getMinBufferSize(44100, 16, 2);
    int i = this.e;
    this.d = new byte[i];
    this.c = new AudioRecord(1, 44100, 16, 2, i);   // 1 = AudioSource.MIC

redbricklane (zapr)

    // 1 = AudioSource.MIC, 16 = CHANNEL_IN_MONO, 2 = ENCODING_PCM_16BIT
    AudioRecord localAudioRecord = new AudioRecord(1, 8000, 16, 2, 122880);
    if (localAudioRecord.getState() == 1) {          // AudioRecord.STATE_INITIALIZED
        this.logger.write_log("Recorder initialized", "finger_print_manager");
        this.logger.write_log("Recording started", "finger_print_manager");
        localAudioRecord.startRecording();
        // ...
    }

runacr

    int minBufferSize = AudioRecord.getMinBufferSize(11025, 16, 2);
    this.K = new AudioRecord(6, 11025, 16, 2, minBufferSize * 10);   // 6 = AudioSource.VOICE_RECOGNITION

shopkick

    .field bitDetectThreshold:Ljava/lang/Double;
    .field carrierThreshold:Ljava/lang/Double;
    .field detectThreshold:Ljava/lang/Double;
    .field frFactors:Ljava/lang/String;
    .field gapInSamplesBetweenLowFreqAndCalibration:Ljava/lang/Integer;
    .field maxFracOfAvgForOne:Ljava/lang/Double;
    .field maxIntermediates:Ljava/lang/Integer;
    .field minCarriers:Ljava/lang/Integer;
    .field noiseThreshold:Ljava/lang/Double;
    .field numPrefixBitsRequired:Ljava/lang/Integer;
    .field numSamplesToCalibrateWith:Ljava/lang/Integer;
    .field presenceDetectMinBits:Ljava/lang/Integer;
    .field presenceNarrowBandDetectThreshold:Ljava/lang/Double;
    .field presenceStrengthRatioThreshold:Ljava/lang/Double;
    .field presenceWideBandDetectThreshold:Ljava/lang/Double;
    .field useErrorCorrection:Ljava/lang/Boolean;
    .field wideBandPresenceDetectEnabled:Ljava/lang/Boolean;
    .field highPassFilterType:Ljava/lang/Integer;

    Java_com_shopkick_app_presence_NativePresencePipeline_setDopplerCorrectionEnabledParam
    Java_com_shopkick_app_presence_NativePresencePipeline_setHighPassFilterEnabledParam
    Java_com_shopkick_app_presence_NativePresencePipeline_setWideBandDetectEnabledParam
    Java_com_shopkick_app_presence_NativePresencePipeline_setNumPrefixBitsRequiredParam
    Java_com_shopkick_app_presence_NativePresencePipeline_setPresenceDetectNarrowBandDetectThresholdFCParam
    Java_com_shopkick_app_presence_NativePresencePipeline_setGapInSamplesBtwLowFreqAndCalibFCParam
    Java_com_shopkick_app_presence_NativePresencePipeline_setCarrierThresholdFCParam
    Java_com_shopkick_app_presence_NativePresencePipeline_setHighPassFilterTypeHPFParam

signal360 (sonic notify)

    private static final int BUFFER_SIZE = 131072;
    private static final int FREQ_STEPS = 128;
    private static final int PAYLOAD_LENGTH = 48;
    private static final int READ_BUFFER_SIZE = 16384;
    public static final int SAMPPERSEC = 44100;
    private static final int STEP_SIZE = 256;
    private static final int TIMESTEPS_PER_CHUNK = 64;
    private static final int USABLE_LENGTH = 256;

silverpush

    for (int i : new int[]{4096, 8192}) {
        AudioRecord audioRecord = new AudioRecord(1, 44100, 16, 2, i);   // 1 = AudioSource.MIC
        if (audioRecord.getState() == 1) {                               // AudioRecord.STATE_INITIALIZED
            audioRecord.release();
            return i;
        }
    }

soniccode

    // player
    float[] decodeLocationFloat = decodeLocationFloat(str);
    // 8 = AudioManager.STREAM_DTMF, 2 = AudioFormat.CHANNEL_CONFIGURATION_MONO (deprecated),
    // 2 = ENCODING_PCM_16BIT, 1 = AudioTrack.MODE_STREAM
    AudioTrack audioTrack = new AudioTrack(8, 44100, 2, 2, AudioTrack.getMinBufferSize(44100, 2, 2), 1);
    this.audioGenerator = new STAudioGenerator();
    this.audioGenerator.setAudioTrack(audioTrack);
    this.audioGenerator.setAmplitude(this._amplitude);
    this.audioGenerator.setBlockTime(this._blockTime);
    this.audioGenerator.setFrequencies(decodeLocationFloat);

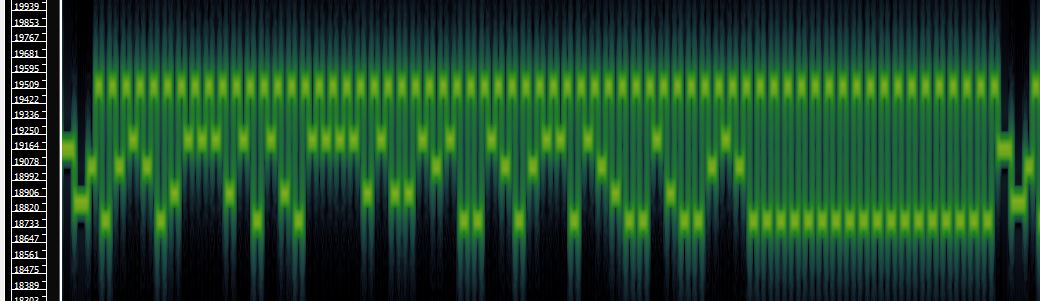

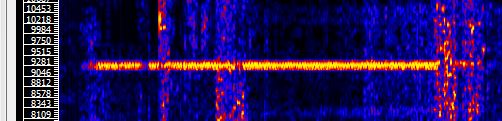

soundpays

    this.a.a(18400.0f, 20000.0f);
    this.a.a(new float[]{
            18475.0f, 18550.0f, 18625.0f, 18700.0f, 18775.0f,
            18850.0f, 18925.0f, 19000.0f, 19075.0f, 19150.0f,
            19225.0f, 19300.0f, 19375.0f, 19450.0f, 19525.0f,
            19600.0f, 19675.0f, 19750.0f, 19825.0f, 19900.0f});
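The 20 frequencies passed to `this.a.a(new float[]{...})` form a regular grid: 18475 Hz plus multiples of 75 Hz, up to 19900 Hz. The grid sits inside the 18400 to 20000 Hz range set by the first call. The sketch below rebuilds the grid and snaps a measured frequency to the nearest tone. `nearestIndex` is a hypothetical helper. The 44.1 kHz sample rate in the FFT-length remark is an assumption, since the excerpt does not show the rate.

```java
/** Rebuilds the SoundPays tone grid and snaps a frequency to it (illustrative sketch). */
final class SoundpaysGrid {
    static final float FIRST_HZ = 18475.0f;
    static final float SPACING_HZ = 75.0f;
    static final int COUNT = 20;

    static float[] grid() {
        float[] g = new float[COUNT];
        for (int k = 0; k < COUNT; k++) {
            g[k] = FIRST_HZ + SPACING_HZ * k;
        }
        return g;
    }

    /** Index of the grid tone nearest to hz, clamped to the valid range (hypothetical helper). */
    static int nearestIndex(float hz) {
        int k = Math.round((hz - FIRST_HZ) / SPACING_HZ);
        return Math.max(0, Math.min(COUNT - 1, k));
    }

    public static void main(String[] args) {
        float[] g = grid();
        System.out.println(g[0] + " .. " + g[COUNT - 1] + " Hz");
        // An FFT whose bin spacing matches the grid needs 44100 / 75 samples per frame at 44.1 kHz
        System.out.println("FFT length for 75 Hz bins: " + (int) Math.ceil(44100 / SPACING_HZ));
    }
}
```

An FFT whose bin spacing matches the 75 Hz grid needs at least 44100 / 75 = 588 samples per frame at 44.1 kHz.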

tonetag

    if (stringBuilder2.matches("^[0-9]{1,5}[.][0-9]{1,2}$"))

    // 1 = AudioSource.MIC, 16 = CHANNEL_IN_MONO, 2 = ENCODING_PCM_16BIT
    this.aY = new AudioRecord(1, 44100, 16, 2, 50000);

    private static native void initRecordingNative(int i, int i2, int i3, String str);
    private static native void initRecordingUltraToneNative(int i, int i2, String str);
    private native void processUltraFreqsNative(double[] dArr, String str);

    _8KHZ(40),
    _10KHZ(50),
    _12KHZ(60),
    _14KHZ(70),
    _16KHZ(80),
    _18KHZ(90);

trillbit

    private static final int SAMPLE_RATE = 44100;
    private static final String TAG = "AutoToneDetectorClass";
    private static final int WINDOW_SIZE = 4096;
    private static final int CHUNKS_AFTER_TRIGGER = 23;
    private static final int CHUNKS_BEFORE_TRIGGER = 2;
    private static final int CHUNK_SIZE = 4096;
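The trillbit constants translate into durations, assuming `CHUNK_SIZE` counts samples at `SAMPLE_RATE` (an assumption). One 4096-sample chunk then lasts about 92.9 ms. Two chunks before plus 23 after a trigger cover 25 × 4096 / 44100 ≈ 2.32 s. The excerpt does not show whether the trigger chunk is counted separately, and the hypothetical helper below does not count it.

```java
/** Converts the trillbit chunk constants into durations (illustrative; assumes CHUNK_SIZE is in samples). */
final class TrillbitTiming {
    static final int SAMPLE_RATE = 44100;
    static final int CHUNK_SIZE = 4096;
    static final int CHUNKS_BEFORE_TRIGGER = 2;
    static final int CHUNKS_AFTER_TRIGGER = 23;

    /** Duration of one chunk in milliseconds. */
    static double chunkMillis() {
        return CHUNK_SIZE * 1000.0 / SAMPLE_RATE;
    }

    /** Audio kept around a trigger, not counting a separate trigger chunk. */
    static double capturedSeconds() {
        return (CHUNKS_BEFORE_TRIGGER + CHUNKS_AFTER_TRIGGER) * CHUNK_SIZE / (double) SAMPLE_RATE;
    }

    public static void main(String[] args) {
        System.out.printf("chunk = %.1f ms, capture = %.2f s%n", chunkMillis(), capturedSeconds());
    }
}
```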

    // 16 = CHANNEL_IN_MONO, 2 = ENCODING_PCM_16BIT
    this.recorder = new AudioRecord(this.AUDIO_SOURCE, SAMPLE_RATE, 16, 2, 4096);
    int recorderState = this.recorder.getState();
    Log.i(TAG, "RECORDER STATE : " + String.valueOf(recorderState));
    if (recorderState == 1) {   // AudioRecord.STATE_INITIALIZED
        try {
            this.recorder.startRecording();
            Log.i(TAG, "Recording Started");
            startAsyncTasks();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    try {
        data = new DataPart("temp.mp3", UploadToServer.this.getDatafromFile(str));
    } catch (IOException e) {
        e.printStackTrace();
    }
    params.put("mp3file", data);
    return params;

    Log.d("V2", "Sending Information to backend");
    js.put("Device", Build.MANUFACTURER + " " + Build.MODEL);
    js.put("audio_chunks", jsonArr);
    js.put("MIC_SRC", "MIC");
    js.put("FREQ_PLAYED", "3730");
    js.put("MIN_BUFFER", "4096");

    int[] original = new int[]{5, 5, 1, 2, 3, 4};

    jSONObject2.put("edTy", d);
    jSONObject2.put("edSNRdB", d2);
    jSONObject2.put("edTyLower", d3);
    jSONObject2.put("edSNRdBLower", d4);
    jSONObject2.put("nuclearNormLower", d5);
    jSONObject2.put("edTyUpper", d6);
    jSONObject2.put("edSNRdBUpper", d7);
    jSONObject2.put("nuclearNormUpper", d8);
    if (this.p == 2) {
        jSONObject2.put("edPass", z ? 1 : 0);
        jSONObject2.put("edEnergyRatioArraydB", jSONArray);
    }

cifrasoft

    private static double[] filter(double[] xx) {
        // Second-order IIR filter:
        // x[i] = b0*xx[i] + b1*xx[i-1] + b2*xx[i-2] - a1*x[i-1] - a2*x[i-2]
        int n = xx.length;
        double[] x = new double[n];
        double[] b = new double[]{0.0711d, -0.1422d, 0.0711d};
        double[] a = new double[]{1.0d, 1.1173d, 0.4016d};
        x[0] = b[0] * xx[0];
        x[1] = ((b[0] * xx[1]) + (b[1] * xx[0])) - (a[1] * x[0]);
        for (int i = 2; i < n; i++) {
            x[i] = ((((b[0] * xx[i]) + (b[1] * xx[i - 1])) + (b[2] * xx[i - 2])) - (a[1] * x[i - 1])) - (a[2] * x[i - 2]);
        }
        return x;
    }

    public static boolean isUltrasoundDetected(short[] aSamples) {
        // Decompiled form of: assert aSamples.length >= 2205;  (2205 samples = 50 ms at 44.1 kHz)
        if ($assertionsDisabled || aSamples.length >= 2205) {
            int i;
            double[] x = filter(normalise(Arrays.copyOfRange(aSamples, 0, 2205)));
            double[] y = new double[2205];
            int zeroIndex = 1102 + 1;
            double[] f = new double[zeroIndex];
            for (i = 0; i < zeroIndex; i++) {
                f[i] = (((double) 22050) * (((double) (i + i)) + 0.0d)) / ((double) 2205);   // = 20 * i Hz
            }
            FFT.fft(x, y);
            for (i = 0; i < 2205; i++) {
                x[i] = (2.0d * ((x[i] * x[i]) + (y[i] * y[i]))) / ((double) 4862025);         // 4862025 = 2205^2
            }
            double sum = 0.0d;
            double max = Double.MIN_VALUE;
            for (i = 0; i < zeroIndex; i++) {
                max = Math.max(max, x[(i + 1103) - 1]);
                if (f[i] >= 18000.0d) {
                    sum += x[(i + 1103) - 1];
                }
            }
            if ((sum / ((double) zeroIndex)) / max < fThreshold) {
                return true;
            }
            return false;
        }
        throw new AssertionError();
    }
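The coefficients in `filter()` define a second-order IIR filter H(z) = (b0 + b1 z^-1 + b2 z^-2) / (1 + a1 z^-1 + a2 z^-2). Two values pin down its shape:

- At DC the gain is zero, because b0 + b1 + b2 = 0.
- At the Nyquist frequency the gain is (0.0711 + 0.1422 + 0.0711) / (1 - 1.1173 + 0.4016) ≈ 1.0.

So it behaves as a high-pass filter ahead of the FFT. The sketch evaluates the magnitude response at a few frequencies, assuming the 44.1 kHz rate set elsewhere in the cifrasoft code. It also shows that the `f[i]` expression reduces to a 20 Hz bin spacing (44100 / 2205), which puts the 18 kHz threshold at bin 900. The class name is hypothetical.

```java
/** Magnitude response of Cifrasoft's filter() coefficients and the FFT bin spacing (illustrative). */
final class CifraFilterResponse {
    // Coefficients copied from filter(); A(z) = 1 + a1 z^-1 + a2 z^-2 matches the minus signs there
    static final double[] B = {0.0711, -0.1422, 0.0711};
    static final double[] A = {1.0, 1.1173, 0.4016};

    /** |H(e^{jw})| with w = 2*pi*hz/fs, where H(z) = B(z) / A(z). */
    static double gain(double hz, double fs) {
        double w = 2 * Math.PI * hz / fs;
        double numRe = 0, numIm = 0, denRe = 0, denIm = 0;
        for (int k = 0; k < 3; k++) {
            double c = Math.cos(w * k);
            double s = -Math.sin(w * k);   // e^{-jwk} = cos(wk) - j sin(wk)
            numRe += B[k] * c;
            numIm += B[k] * s;
            denRe += A[k] * c;
            denIm += A[k] * s;
        }
        return Math.hypot(numRe, numIm) / Math.hypot(denRe, denIm);
    }

    /** Frequency of bin i for an n-point FFT at sample rate fs (same value as f[i] above). */
    static double binHz(int i, double fs, int n) {
        return fs * i / n;
    }

    public static void main(String[] args) {
        double fs = 44100;
        for (double hz : new double[] {0, 5000, 11025, 15000, 18000, 20000, 22050}) {
            System.out.printf("%6.0f Hz -> |H| = %.4f%n", hz, gain(hz, fs));
        }
        double spacing = binHz(1, fs, 2205);
        System.out.println("bin spacing " + spacing + " Hz; 18 kHz is bin " + (int) (18000 / spacing));
    }
}
```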

DV (Dov-e)

    private void processAudio(short[] buffer, int nbOfShorts) {
        int count = 0;
        int bufIndex = 0;
        while (bufIndex < nbOfShorts) {
            count = Math.min(nbOfShorts - bufIndex, this.samplesBufCountdown);
            this.goertzel.processShorts(buffer, bufIndex, count);
            this.samplesBufCountdown -= count;
            if (this.samplesBufCountdown <= 0) {
                if (this.goertzel.sampleCount >= this.goertzel.sampleCountMax) {
                    addMagnitude((float) this.goertzel.getMagnitude());
                }
                this.goertzel.init();
                if (this.samplesBufSavedCount > 0) {
                    this.goertzel.processShorts(this.samplesBuf, 0, this.samplesBufSavedCount);
                    this.samplesBufSavedCount = 0;
                }
                this.goertzel.processShorts(buffer, bufIndex, count);
                this.samplesBufCountdown = (int) (((float) this.windowSize) * 0.5f);
            }
            bufIndex += count;
        }
        if (this.samplesBufCountdown != ((int) (((float) this.windowSize) * 0.5f))) {
            System.arraycopy(buffer, bufIndex - count, this.samplesBuf, 0, count);
            this.samplesBufSavedCount = count;
        }
    }
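`processAudio()` feeds samples to a `goertzel` object window by window and resets the countdown to half a window after each result, which suggests half-overlapping windows. The Goertzel algorithm computes a single DFT bin with a second-order recurrence. That is cheaper than a full FFT when only a few beacon frequencies matter.

The sketch below is a generic textbook Goertzel with 50% overlap, not Dov-e's own class. The 19 kHz test tone, the 1024-sample window and the 44.1 kHz rate are assumptions.

```java
/** Generic Goertzel power estimate with half-overlapping windows (illustrative sketch). */
final class GoertzelSketch {

    /** Squared DFT magnitude at targetHz over x[offset, offset + count). */
    static double power(short[] x, int offset, int count, double targetHz, double fs) {
        double coeff = 2.0 * Math.cos(2.0 * Math.PI * targetHz / fs);
        double s1 = 0.0, s2 = 0.0;
        for (int i = offset; i < offset + count; i++) {
            double s0 = x[i] + coeff * s1 - s2;   // second-order resonator recurrence
            s2 = s1;
            s1 = s0;
        }
        return s1 * s1 + s2 * s2 - coeff * s1 * s2;
    }

    /** Powers of consecutive windows that overlap by half (hop = windowSize / 2). */
    static double[] slidingPowers(short[] x, int windowSize, double targetHz, double fs) {
        int hop = windowSize / 2;
        int frames = x.length < windowSize ? 0 : (x.length - windowSize) / hop + 1;
        double[] out = new double[frames];
        for (int f = 0; f < frames; f++) {
            out[f] = power(x, f * hop, windowSize, targetHz, fs);
        }
        return out;
    }

    /** Test signal: a sine of the given frequency with amplitude 10000. */
    static short[] tone(double hz, double fs, int n) {
        short[] x = new short[n];
        for (int i = 0; i < n; i++) {
            x[i] = (short) Math.round(10000 * Math.sin(2 * Math.PI * hz * i / fs));
        }
        return x;
    }

    public static void main(String[] args) {
        double fs = 44100;
        short[] x = tone(19000, fs, 4096);
        double on = power(x, 0, 1024, 19000, fs);
        double off = power(x, 0, 1024, 18000, fs);
        System.out.printf("19 kHz / 18 kHz power ratio: %.0f%n", on / off);
        System.out.println("frames: " + slidingPowers(x, 1024, 19000, fs).length);
    }
}
```

With a 19 kHz input, the power measured at 19 kHz is well over a hundred times the power at 18 kHz. Over 4096 samples, a 1024-sample window with a 512-sample hop gives seven frames.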

Fluzo

    public static b a() {
        b bVar = new b();
        bVar.a = 16000;
        bVar.b = 300;
        bVar.c = 25;
        bVar.d = 0.97f;
        bVar.e = 40;
        bVar.f = 25;
        bVar.g = 5;
        return bVar;
    }
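The Fluzo keys (`frame_length_milliseconds`, `preemphasis_coefficient`, `num_filters`, `num_coefficients`, `derivative_window_size`) look like the parameters of an MFCC-style audio front end. The sketch below covers the first two steps, pre-emphasis and framing. It assumes that `bVar.a = 16000` is the sample rate, `bVar.d = 0.97` the pre-emphasis coefficient, and 25 ms the frame length. The excerpt does not confirm this field mapping, and the class is hypothetical.

```java
/** Pre-emphasis and frame sizing, the first steps of an MFCC-style front end (illustrative). */
final class FluzoFrontEndSketch {

    /** y[n] = x[n] - alpha * x[n-1], with y[0] = x[0]. */
    static float[] preEmphasis(float[] x, float alpha) {
        float[] y = new float[x.length];
        for (int n = 0; n < x.length; n++) {
            y[n] = n == 0 ? x[0] : x[n] - alpha * x[n - 1];
        }
        return y;
    }

    /** Number of samples in a frame of frameMs milliseconds. */
    static int samplesPerFrame(int sampleRate, int frameMs) {
        return sampleRate * frameMs / 1000;
    }

    public static void main(String[] args) {
        float[] y = preEmphasis(new float[] {1f, 1f, 1f, 1f}, 0.97f);
        System.out.println(java.util.Arrays.toString(y));
        System.out.println(samplesPerFrame(16000, 25) + " samples per 25 ms frame");
    }
}
```

At 16 kHz, a 25 ms frame is 16000 × 25 / 1000 = 400 samples. Pre-emphasis boosts high frequencies relative to low ones, so a constant input shrinks to about 0.03 after the first sample.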

Hotstar

    public native byte[] assembleDataPacket(byte[] bArr);
    public native byte[] assembleTextPacket(char[] cArr);
    public native int measureModulatedSamples(byte[] bArr, int i);
    public native double modulatePacketBytes(byte[] bArr, byte[] bArr2, int i, double d);
    public native void processPcmData(short[] sArr, int i);

Moodmedia

    public static final DataRangeMode DEFAULT_DATA_RANGE_MODE = DataRangeMode.LONG;
    public static final SymbolRangeMode DEFAULT_SYMBOL_RANGE_MODE = SymbolRangeMode.LARGE;
    public static final float DEFAULT_VOLUME = 0.75f;
    public static final int FOREVER = -1;
    public static final float IMMUTABLE_VOLUME = -1.0f;
    private static DataRangeMode e = DEFAULT_DATA_RANGE_MODE;
    private static SymbolRangeMode f = DEFAULT_SYMBOL_RANGE_MODE;
    private static Channel g = Channel.CHANNEL_ONE;

RealityMine

    public static final int MAX_FILES_UPLOAD = 10;
    private static final int RECORDER_AUDIO_ENCODING = 2;   // AudioFormat.ENCODING_PCM_16BIT
    private static final int RECORDER_CHANNELS = 16;        // AudioFormat.CHANNEL_IN_MONO
    private static final int RECORDER_SAMPLERATE = 8000;
    private int MAX_UPLOAD_FAILURE_COUNT = 3;

runacr

    params.bitDetectThreshold = flags.pdFreqCodingBitDetectThreshold;
    params.gapInSamplesBetweenLowFreqAndCalibration = flags.pdFreqCodingGapInSamplesBetweenLowFreqAndCalibration;
    params.maxFracOfAvgForOne = flags.pdFreqCodingMaxFreqOfAvgForOne;
    params.numSamplesToCalibrateWith = flags.pdFreqCodingNumSamplesToCalibrateWith;
    params.presenceDetectMinBits = flags.pdFreqCodingPresenceDetectMinBits;
    params.presenceNarrowBandDetectThreshold = flags.pdFreqCodingPresenceNarrowBandDetectThreshold;
    params.presenceStrengthRatioThreshold = flags.pdFreqCodingPresenceStrengthRatioThreshold;
    params.presenceWideBandDetectThreshold = flags.pdFreqCodingPresenceWideBandDetectThreshold;
    params.wideBandPresenceDetectEnabled = flags.pdWideBandDetectEnabled;
    params.useErrorCorrection = flags.pdFreqCodingUseErrorCorrection;
    params.frFactors = flags.pdFreqCodingFrFactors;
    params.numPrefixBitsRequired = flags.pdPrefixBitsRequired;
    params.minCarriers = flags.pdFreqCodingMinCarriers;
    params.maxIntermediates = flags.pdFreqCodingMaxIntermediate;
    params.carrierThreshold = flags.pdFreqCodingCarrierThreshold;
    params.detectThreshold = flags.pdFreqCodingDetectThreshold;
    params.noiseThreshold = flags.pdFreqCodingNoiseThreshold;

Signal360/sonic notify

    public long processBuffer(int byteCount) {
        int stepCount = byteCount / 256;
        long code = 0;
        for (int ii = 0; ii < stepCount; ii++) {
            int offset = ii * 256;
            for (int q = 0; q < 256; q++) {
                this.mSamples[q] = this.mData[offset + q];
            }
            this.mSamplesBuffer.position(0);
            this.mSamplesBuffer.put(this.mSamples);
            long tempCode = processSamplesAtTime(this.mSamplesBuffer, this.base_timestamp + bytes2millis(offset * 2));
            if (tempCode > 0) {
                if (this.mService.useCustomPayload()) {
                    int customPayload = getCustomPayload();
                    Log.d(TAG, "Heard signal " + tempCode + " customPayload " + customPayload);
                    code = tempCode;
                    this.mService.heardCode(new SignalAudioCodeHeard(code, null, Integer.valueOf(customPayload)));
                } else {
                    long timeInterval = getTimeIntervalRel(System.currentTimeMillis());
                    Log.d(TAG, "Heard signal " + tempCode + " timeInterval " + timeInterval);
                    code = tempCode;
                    this.mService.heardCode(new SignalAudioCodeHeard(code, Long.valueOf(timeInterval), null));
                }
            }
        }
        return code;
    }
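`processBuffer()` walks the buffer in 256-sample steps (`STEP_SIZE`). It timestamps each step with `bytes2millis(offset * 2)`, i.e. two bytes per sample. The body of `bytes2millis()` is not shown. The version below is a hypothetical stand-in that assumes 16-bit mono PCM at `SAMPPERSEC` = 44100. Under that assumption one step lasts about 5.8 ms. Also, `STEP_SIZE` × `TIMESTEPS_PER_CHUNK` = 256 × 64 = 16384, the same value as `READ_BUFFER_SIZE`.

```java
/** Timing arithmetic behind Signal360's processBuffer() (illustrative; assumes 16-bit mono PCM). */
final class Signal360Timing {
    static final int SAMPPERSEC = 44100;
    static final int STEP_SIZE = 256;
    static final int TIMESTEPS_PER_CHUNK = 64;

    /** Hypothetical stand-in for bytes2millis(): 2 bytes per sample, truncated to whole ms. */
    static long bytes2millis(long bytes) {
        return bytes * 1000L / (2L * SAMPPERSEC);
    }

    public static void main(String[] args) {
        int chunkSamples = STEP_SIZE * TIMESTEPS_PER_CHUNK;   // 16384, same value as READ_BUFFER_SIZE
        System.out.println("one step  = " + (STEP_SIZE * 1000.0 / SAMPPERSEC) + " ms");
        System.out.println("one chunk = " + chunkSamples + " samples");
        for (int ii = 0; ii < 3; ii++) {
            int offset = ii * STEP_SIZE;                      // sample offset, as in processBuffer()
            System.out.println("step " + ii + " starts at +" + bytes2millis(offset * 2L) + " ms");
        }
    }
}
```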

Silverpush

    // Decompiler output as captured; i, i2, r4, dArr and a.c are defined outside the excerpt
    int i3 = 18000;
    i = 0;
    double d = 0.0d;
    while (i3 < 20000) {
        f fVar = new f((float) this.g, (float) i3, dArr);
        fVar.a();
        double c = fVar.c();
        if (c > d) {
            i = i3;
            d = c;
        }
        int i4 = c > ((double) a.c) ? i2 + 1 : i2;
        if (i4 > 1) {
            a.c += 3000;
            i3 = i;
            i = i4;
            if (i == 1 && r4 > ((double) a.c)) {
                publishProgress(new Integer[]{Integer.valueOf(i3)});
                return;
            }
        }
        // ... (rest of the loop not shown)
    }

Soniccode

    public static native void initFFTUltraTones(int i);
    public native void FFTMusicalTones(double[] dArr, double[] dArr2);
    public native void FFTMusicalTones16Byte(double[] dArr, double[] dArr2);
    public native void FFTNormal(double[] dArr, double[] dArr2);
    public native void FFTUltraTones(double[] dArr, double[] dArr2);
    public native void initFFTMusicalTones(int i);
    public native void initFFTMusicalTones16Byte(int i);

    private void decodeAudio() {
        this.finalAudioChunks = get_audio_chunks_from_array(this.audioChunks, 4096);
        this.start_point_1[0][0] = 15000;
        this.start_point_1[0][1] = 25000;
        this.start_point_2[0][0] = 40000;
        this.start_point_2[0][1] = 30000;
        this.start_point_3[0][0] = 70000;
        this.start_point_3[0][1] = 25000;

        this.finalAudioChunks = get_audio_chunks_from_array(this.audioChunks, 4096);
        this.start_point_1[0][0] = 0;
        this.start_point_1[0][1] = 30000;
        this.start_point_2[0][0] = 30000;
        this.start_point_2[0][1] = 30000;
        this.start_point_3[0][0] = 60000;
        this.start_point_3[0][1] = 30000;
        // ...
    }

    enum STMFPreambleFreqIndex {
        _17P00KHZ,
        _17P25KHZ,
        _17P50KHZ,
        _17P75KHZ,
        _18P00KHZ,
        _18P25KHZ,
        _18P50KHZ,
        _18P75KHZ
    }
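The `STMFPreambleFreqIndex` names encode frequencies: `_17P25KHZ` reads as 17.25 kHz. Together they give eight preamble tones from 17.00 to 18.75 kHz in 250 Hz steps. The mapping below is inferred from the names only, since the SDK's own lookup is not shown. The wrapper class and `hz` method are hypothetical.

```java
/** Maps STMFPreambleFreqIndex constants to frequencies inferred from their names (illustrative). */
final class StmfPreamble {
    enum STMFPreambleFreqIndex {
        _17P00KHZ, _17P25KHZ, _17P50KHZ, _17P75KHZ,
        _18P00KHZ, _18P25KHZ, _18P50KHZ, _18P75KHZ
    }

    /** 17000 Hz plus 250 Hz per index step. */
    static int hz(STMFPreambleFreqIndex idx) {
        return 17000 + 250 * idx.ordinal();
    }

    public static void main(String[] args) {
        for (STMFPreambleFreqIndex idx : STMFPreambleFreqIndex.values()) {
            System.out.println(idx + " -> " + hz(idx) + " Hz");
        }
    }
}
```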

    public enum DEVICE_INFO_TYPE {
        OS,
        OS_VERSION,
        MAKE,
        MODEL,
        SCREEN_WIDTH,
        SCREEN_HEIGHT,
        LANGUAGE,
        HARDWARE_VERSION,
        PPI_SCREEN_DENSITY,
        APP_BUNDLE,
        APP_NAME,
        APP_VERSION,
        CARRIER,
        CONNECTION_TYPE,
        ORIENTATION
    }

lisnr

    DATASET_ADVT_ID = "advertising_id";
    DATASET_APP_USAGE_FREQ = "app_use_freq";
    DATASET_CONNECTIVITY_TYPE = "network_type";
    DATASET_COUNTRY = "country_code";
    DATASET_DATA_RECEIVED_BYTES = "recieved_bytes";
    DATASET_DATA_RECEIVED_BYTES_MOBILE = "recieved_mobile_bytes";
    DATASET_DATA_RECEIVED_BYTES_WIFI = "recieved_wifi_bytes";
    DATASET_DATA_TRANSMITTED_BYTES = "transmitted_bytes";
    DATASET_DATA_TRANSMITTED_BYTES_MOBILE = "transmitted_mobile_bytes";
    DATASET_DATA_TRANSMITTED_BYTES_WIFI = "transmitted_wifi_bytes";
    DATASET_DEVICE_LANG = "device_lang";
    DATASET_DEVICE_STORAGE_MEMORY_FREE = "storage_available_size_mb";
    DATASET_DEVICE_STORAGE_MEMORY_TOTAL = "storage_total_size_mb";
    DATASET_DEVICE_UPTIME = "up_time";
    DATASET_IMEI = "imei";
    DATASET_INSTALLED_APPS = "installed_packages";
    DATASET_LOCATION_LAT_LONG = "location";
    DATASET_NUMBER_OF_PHOTOS = "photos_count";
    DATASET_OPERATOR_CELL_LOCATION = "operator_cell_location";
    DATASET_OPERATOR_CIRCLE = "operator_circle";
    DATASET_OPERATOR_NAME = "operator";
    PREF_BATTERY_CHECK = "batteryCheck";

    jSONObject2.put("bundle", this.q);
    jSONObject2.put("androidId", this.v);
    jSONObject2.put("advtId", this.u);
    jSONObject2.put("advtLmt", this.w);
    jSONObject2.put("sdkVer", this.t);
    jSONObject2.put("appName", this.s);
    jSONObject2.put("appVer", this.r);
    jSONObject2.put("osForkName", this.x);
    jSONObject2.put("devHwv", this.A);
    jSONObject2.put("devMake", this.z);
    jSONObject2.put("devModel", this.y);
    jSONObject2.put("apiLevel", this.D);
    jSONObject2.put("isRooted", this.I);
    jSONObject2.put("hasAudioPerm", this.E);
    jSONObject2.put("hasLocPerm", this.F);
    jSONObject2.put("hasPhStatePerm", this.H);
    jSONObject2.put("hasStoragePerm", this.G);
    jSONObject2.put("devDensityPpi", this.B);
    jSONObject2.put("osForkVer", this.C);

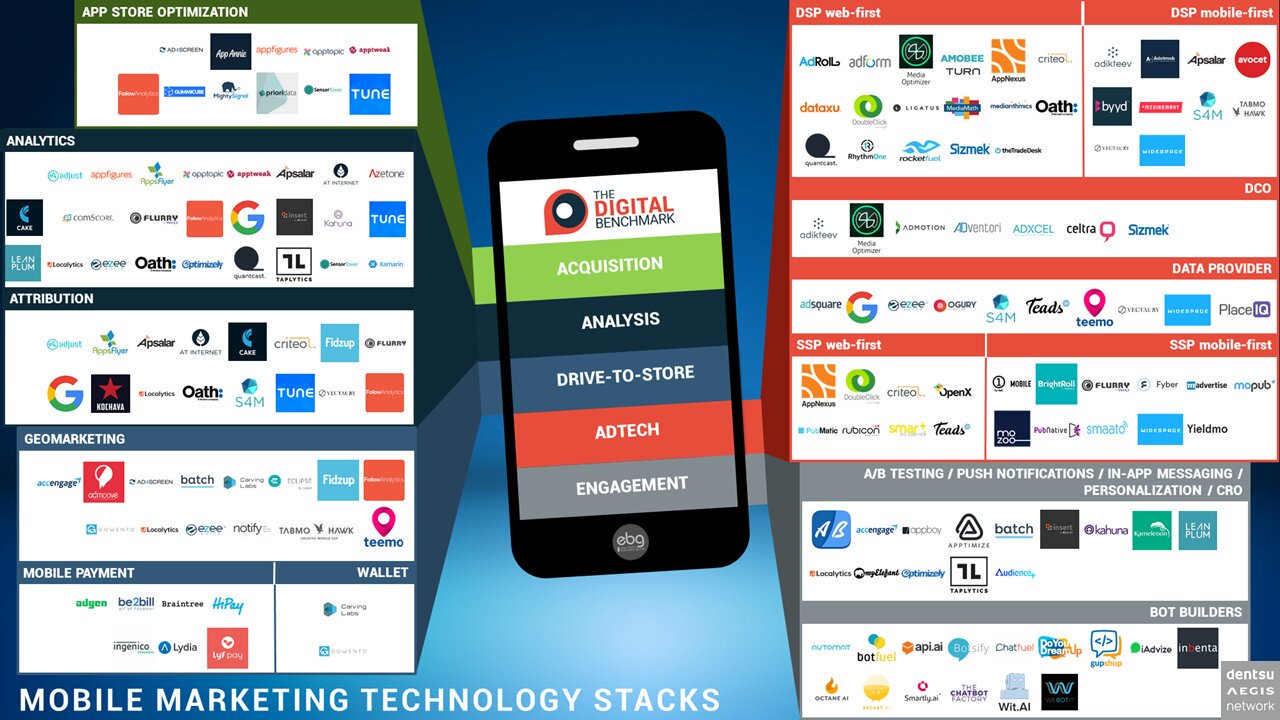

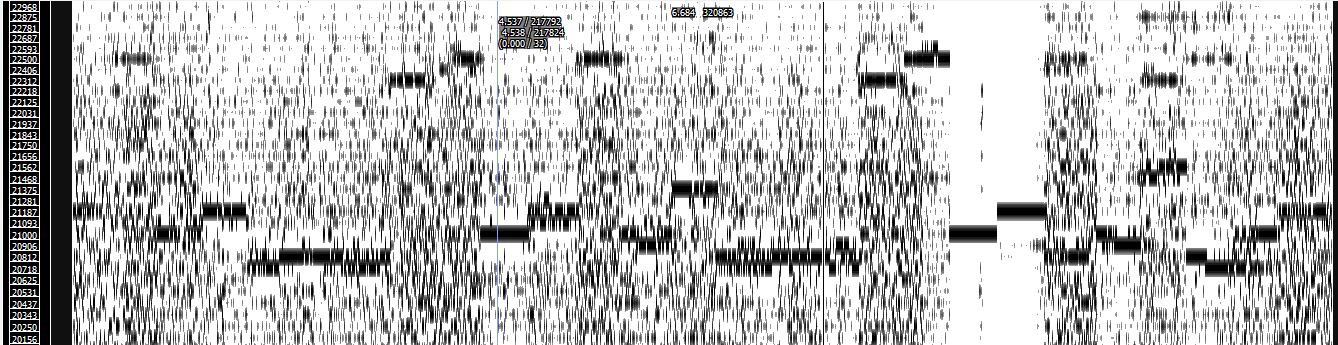

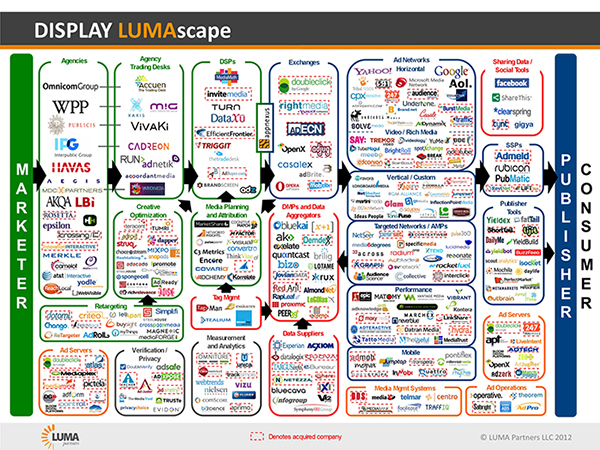

"/system/app/Super user.apk", "/sbin/su", "/system/bin/su", "/system/xbin/su", "/data/local/xbin/su", "/data/local/bin/su", "/system/sd/xbin/su", "/system/bin/failsafe/su", "/data/local/su", "/su/bin/su"  Example of advertising technology companies network

Example of advertising technology companies network